As the data economy grows and the pace of digital transformation accelerates, executives are contending with a business environment where dependable and flexible IT infrastructure is crucial. In turn, data storage costs continue to rise exponentially. How and where teams manage data significantly affects company operations and, more importantly, business growth and performance.

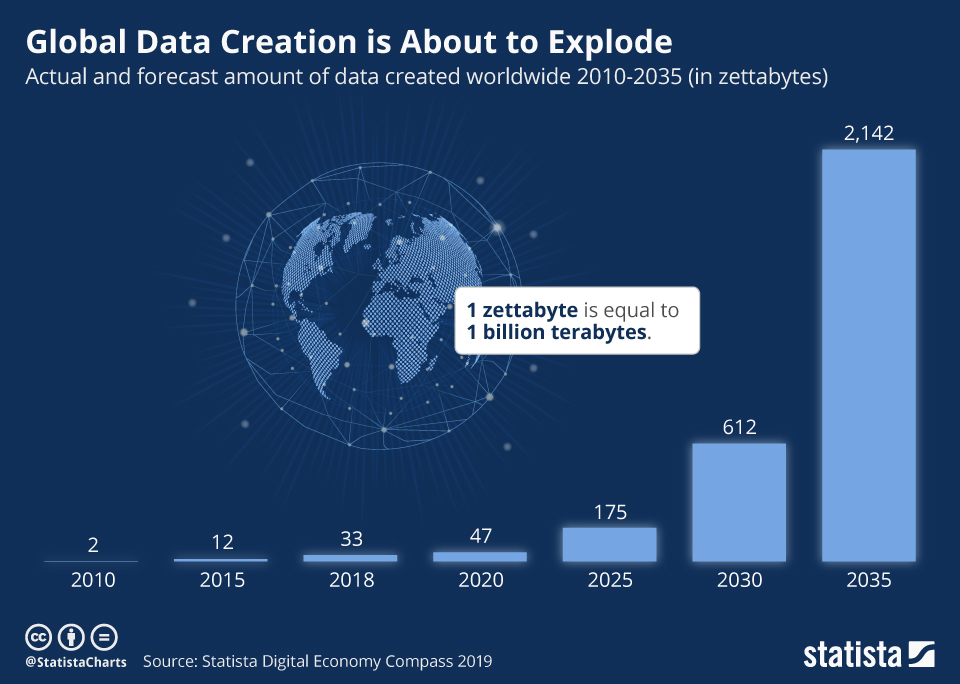

Chief among IT concerns is the cost of data storage. Recent research forecasts a 22% annual growth rate for cloud data storage, which means volume more than doubles every four years. The International Data Corporation (IDC) predicts that the world’s data will surpass 175 zettabytes by 2025 – more than tripling the volume of stored data in 2020. That’s a lot of data – 1 zettabyte alone would consume enough data center space to fill roughly 20% of Manhattan. Businesses must now plan for petabyte-scale data storage and factor data storage costs into their business plans.
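To sanity-check what a 22% annual growth rate implies, here is a minimal sketch that assumes growth compounds at a constant rate every year (a simplification; real growth will vary). The function names are illustrative only.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years for a quantity to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def growth_multiple(annual_growth_rate: float, years: float) -> float:
    """How many times larger a quantity is after the given number of years."""
    return (1 + annual_growth_rate) ** years


rate = 0.22  # the 22% annual growth forecast cited above
print(f"Doubling time: {doubling_time_years(rate):.1f} years")
print(f"Growth over four years: {growth_multiple(rate, 4):.2f}x")
```

Under that assumption, volume doubles in about three and a half years and grows a little more than 2.2x over four.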

Increased Storage Costs Outstrip Budgets

Among IT managers, 68% name cost as their main data storage pain point. And budgets aren’t keeping pace with this data explosion.

Managing data storage has become a growing, and increasingly impactful, business expense. Cradle-to-grave planning through a sound information lifecycle management (ILM) strategy ensures that data storage practices meet compliance standards while optimizing utility and reducing costs. But this is a topic for a future article.

A big part of controlling data storage costs is being aware of the fine print. Moving to an OpEx pricing model is one way to stabilize monthly expenses, but even with this approach, projecting capacity over time is only the beginning.

Migrating data to the cloud is a viable, scalable option. Many organizations come to a hard realization, though, when monthly invoices arrive much higher than expected: a particular cloud storage option is pricier than they budgeted for.

Depending on your use case, one cloud storage option makes more sense than another. If you’re looking to meet a compliance requirement and don’t intend to access the data often, if at all, then mainstream options might be a good fit. If there’s a level of criticality to the data with the potential need to utilize it in day-to-day operations or in case of a significant disaster, then other options should be considered.

To determine the best fit for a particular scenario, a range of technical and financial requirements needs to be vetted. Below are a few factors that contribute to the financial analysis:

•Performance: the speed at which you can access or recover data

•Data transfer charges (also known as egress): a per GB cost for pulling data from the cloud

•Transaction costs: variable cost per transaction for “puts and gets” (in other words, using stored data)

•Bandwidth cannibalization: using significant network resources to replicate data from the source to cloud storage

Few businesses think about needing to increase their network capacity, and the associated bandwidth costs, when they plan how and how often they’ll transfer data to and from the cloud. Evaluating bundled services that include network, connectivity, and cloud solutions is one way to attack the issue.

Other hidden costs that put a serious dent in the budget are transfer and transaction costs. These seemingly small charges add up, sometimes doubling the monthly cost and obliterating what should be a predictable OpEx model.
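The way egress and transaction charges stack on top of the base storage fee can be sketched with a simple estimator. Everything here is an assumption for illustration: the `StorageRates` class, the `estimate_monthly_cost` function, and especially the rates and volumes, which are hypothetical placeholders and not any provider's actual pricing.

```python
from dataclasses import dataclass


@dataclass
class StorageRates:
    """Hypothetical per-unit rates in USD; placeholders, not real pricing."""
    storage_per_gb: float    # per GB stored per month
    egress_per_gb: float     # per GB pulled out of the cloud
    per_1k_requests: float   # per 1,000 put/get transactions


def estimate_monthly_cost(rates: StorageRates, stored_gb: float,
                          egress_gb: float, requests: int) -> dict:
    """Break a monthly bill into storage, egress, and transaction charges."""
    storage = stored_gb * rates.storage_per_gb
    egress = egress_gb * rates.egress_per_gb
    transactions = requests / 1000 * rates.per_1k_requests
    return {
        "storage": storage,
        "egress": egress,
        "transactions": transactions,
        "total": storage + egress + transactions,
    }


# Illustrative scenario: 100 TB stored, 20 TB pulled back, 40 million requests.
rates = StorageRates(storage_per_gb=0.02, egress_per_gb=0.09, per_1k_requests=0.005)
bill = estimate_monthly_cost(rates, stored_gb=100_000, egress_gb=20_000,
                             requests=40_000_000)
for item, cost in bill.items():
    print(f"{item:>12}: ${cost:,.2f}")
```

With these made-up inputs, the variable charges roughly equal the base storage fee, which is how a bill ends up at about twice the capacity-only estimate. Substituting a provider's published rates and your own access patterns gives a more realistic projection.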

Object Storage Use Grows as Cost-Effective, Scalable Solution

Increasingly, object storage is used as a cost-efficient cloud option, especially for archival data. It’s more scalable and less costly per gigabyte than block and file options, which cost 3-4x more than object storage.

That’s one reason why, as global data volume grows by zettabytes, experts predict that 75% of it will be deployed as object storage.

In addition to their financial benefits, these modern platforms are increasingly being adopted for use cases beyond serving as a secondary target for companies’ data retention requirements. Because the technology attaches descriptive metadata to each stored object, applications can scale easily and users can securely access remotely stored data. It also allows extended, customized metadata that can improve performance and management for specific applications.
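The idea of metadata traveling with each object can be illustrated with a toy in-memory model. This is a conceptual sketch only: `StoredObject`, `ObjectStore`, and the metadata fields are hypothetical names invented for illustration, not the API of any real object storage platform.

```python
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    """An object is its data plus descriptive metadata stored alongside it."""
    key: str
    data: bytes
    metadata: dict = field(default_factory=dict)


class ObjectStore:
    """Toy flat key space; real platforms distribute this across many nodes."""

    def __init__(self):
        self._objects = {}

    def put(self, key: str, data: bytes, **metadata: str) -> None:
        self._objects[key] = StoredObject(key, data, dict(metadata))

    def get(self, key: str) -> StoredObject:
        return self._objects[key]

    def find(self, **criteria: str) -> list:
        """Return keys of objects whose metadata matches every criterion."""
        return sorted(
            key for key, obj in self._objects.items()
            if all(obj.metadata.get(name) == value
                   for name, value in criteria.items())
        )


store = ObjectStore()
store.put("invoices/2023-01.pdf", b"...", department="finance", retention="7y")
store.put("scans/badge-0042.png", b"...", department="security", retention="1y")
store.put("invoices/2023-02.pdf", b"...", department="finance", retention="7y")

print(store.find(department="finance", retention="7y"))
```

Because the custom fields live with the object, an application can select data by attributes such as a retention period without maintaining a separate index, which is the kind of application-specific management the metadata model makes possible.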

On or Off-Prem?

When a company plans its first object storage deployment, the first decision point is whether to implement internally or use a cloud provider. Because of the economies of scale in the cloud provider marketplace, most companies conclude that deploying in-house doesn’t make sense.

Factoring in additional network and data center requirements, as well as the initial capital investment, usually points to an external provider. In choosing an object storage provider, many companies default to the hyperscalers (AWS, Azure, etc.) and forgo evaluating competitive alternatives with atypical cost models that reduce – or even eliminate – variable data usage and other costs.

The challenge of building a cost-effective data storage strategy will only become more complicated as datasets explode. Understanding that data management costs aren’t only about how many GBs, TBs, or, in the future, ZBs of data you store will be crucial to your data management strategy.

LightEdge Can Help You Meet Your Storage Needs

Whether you’re looking for a performance storage solution for your application data, a compliant place to retain and dispose of critical documents, or an immutable object storage solution due to rising ransomware concerns, LightEdge can offer the solutions you need at any of our geographically dispersed data centers.

Let’s work together to ensure your secure, managed, rapidly scalable data storage solution satisfies any workload and achieves maximum performance. The cost of data storage shouldn’t be a shock, and it shouldn’t be hidden.

To learn more about our hosting and cloud solutions, click here.